Projects

Current

Autonomous Robot for Inspection in Unstructured Environments

(D-RIM: Dottorato di interesse nazionale in Robotics and Intelligent Machines – Research Studentship)

Data collection in unstructured environments is a challenge for many real-world applications, such as precision agriculture and industrial inspection. The main objective of this project is to develop an intelligent autonomous system for monitoring unstructured environments using a small autonomous robot. The proposed solution will facilitate the exploration of remote or difficult-to-access places with a mobile platform that integrates various sensors into a low-power embedded system. New hardware and software solutions will be developed to optimize the collection of information from places and objects of particular interest (such as plants or pipelines). The scientific contributions of this project will significantly advance the state of the art in the emerging research areas of TinyML (machine learning for resource-constrained systems) and embedded AI applied to robotics.

DARKO: Dynamic Agile Production Robots That Learn and Optimize Knowledge and Operations

(EU Horizon 2020 RIA, 101017274 – €490k)

This is a collaborative project, involving academic institutions and industrial partners across five European countries. DARKO aims to develop a new generation of agile intralogistics robots with energy-efficient actuators for highly dynamic motion, which can operate safely within changing environments, have predictive planning capabilities, and are aware of human intentions. We lead the project’s work package on Human-Robot Spatial Interaction (HRSI), including tasks for qualitative spatial modelling of human motion and causal inference for safe human-robot interaction, and contribute to other tasks for multi-sensor perception, human motion prediction, and robot intent communication.

Website: [https://cordis.europa.eu/project/id/101017274]

News: [Press release]

Active Robot Perception for Automated Potato Planting

(EPSRC CDT AgriFoRwArdS & Jersey Farmers Union – Research Studentship)

The project investigates new active robot perception solutions for the automation of potato planting on Jersey farms. Technologies for low-cost sensing and quality control of seed potatoes from storage will be explored, including a camera on a robot manipulator to identify and estimate the pose of potatoes that need to be handled by the grasping end-effector. Suitable software solutions will be designed and implemented in the robot sensing system so that it is accurate enough for real outdoor scenarios. The project will also explore the possibility of combining visual and tactile information (i.e. camera + touch sensor) to improve the detection and handling of potatoes in crates. The robot perception system will be integrated into the final mobile platform.

Website: [https://agriforwards-cdt.blogs.lincoln.ac.uk/]

Former

ActiVis: Active Vision with Human-in-the-Loop for the Visually Impaired

(Google Faculty Research Award – $55k)

The research proposed in this project is driven by the need for independent mobility for the visually impaired. It addresses the fundamental problem of active vision with human-in-the-loop, which allows for an improved navigation experience, including real-time categorization of indoor environments with a handheld RGB-D camera. This is particularly challenging due to the unpredictability of human motion and sensor uncertainty. While visual-inertial systems can be used to estimate the position of a handheld camera, often the latter must also be pointed towards observable objects and features to facilitate particular navigation tasks, e.g. to enable place categorization. An attention mechanism for purposeful perception, which drives human actions to focus on surrounding points of interest, is therefore needed. This project proposes a novel active vision system with human-in-the-loop that anticipates, guides and adapts to the actions of a moving user, implemented and validated on a mobile device to aid the indoor navigation of the visually impaired.

Website: [http://lcas.github.io/ActiVis]

News: [Press release] [Grantham Journal]

RASberry: Robotics and Automation Systems for berry production

(Norwegian University of Life Sciences & University of Lincoln)

The RASberry project will develop autonomous fleets of robots for in-field transportation to aid and complement human fruit pickers. In particular, the project will consider strawberry production in polytunnels. A solution for autonomous in-field transportation will significantly decrease strawberry production costs and be the first step towards fully autonomous robotic systems for berry production. The project is a collaboration between the Norwegian University of Life Sciences (NMBU) and the University of Lincoln and will develop a dedicated mobile platform together with software components for fleet management, long-term operation and safe human-robot collaboration in strawberry production facilities. Our main task in this project is to develop, implement, and evaluate strategies for handling the safe and effective interaction of the mobile robots with human workers, including appropriate techniques for robust human detection and behaviour characterisation in real-world scenarios.

Website: [http://rasberryproject.com]

News: [Horticulture Week]

FLOBOT: Floor Washing Robot for Professional Users

(EU Horizon 2020 IA, 645376 – €306k)

This is a collaborative project, involving academic institutions and industrial partners across five European countries. The project will develop a floor washing robot for industrial, commercial, civil and service premises, such as supermarkets and airports. Floor washing tasks have many demanding aspects, including autonomy of operation, navigation and path optimization, safety with regard to humans and goods, interaction with human personnel, easy set-up and reprogramming. FLOBOT addresses these problems by integrating existing and new solutions to produce a professional floor washing robot for wide areas. Our research contribution in this project focuses on robot perception, based on laser range-finder and RGB-D sensors, for human detection, tracking and motion analysis in dynamic environments. Primary tasks include developing novel algorithms and approaches for enabling the acquisition, maintenance and refinement of multiple human motion trajectories for collision avoidance and path optimization, as well as integration of the algorithms with the robot navigation and on-board floor inspection system.

Website: [https://cordis.europa.eu/project/id/645376]

Videos: [People tracking]

News: [Press release] [Ottoetrenta]

ENRICHME: Enabling Robot and assisted living environment for Independent Care and Health Monitoring of the Elderly

(EU Horizon 2020 RIA, 643691 – €543k)

This is a collaborative project, involving academic institutions, industrial partners and charity organizations across six European countries. It tackles the progressive decline of cognitive capacity in the ageing population by proposing an integrated platform for Ambient Assisted Living (AAL) with a mobile robot for long-term human monitoring and interaction, which helps the elderly to remain independent and active for longer. The system will contribute to and build on recent advances in mobile robotics and AAL, exploiting new non-invasive techniques for physiological and activity monitoring, as well as adaptive human-robot interaction, to provide services in support of mental fitness and social inclusion. Our research contribution in this project focuses on robot perception and ambient intelligence for human tracking and identity verification, as well as physiological and long-term activity monitoring of the elderly at home. Primary tasks include developing novel algorithms and approaches for enabling the acquisition, maintenance and refinement of models to describe human motion behaviors over extended periods, as well as integration of the algorithms with the AAL system.

Website: [https://cordis.europa.eu/project/id/643691]

Videos: [PUMS home] [AKTIOS home] [Fondazione Don Gnocchi lab] [LACE demo] [RFID object detection]

News: [Press release 1] [The Times] [Press release 2] [BBC Look North] [Horizon Magazine] [BBC Future] [PBS]

Mobile Robotics for Ambient Assisted Living

(University of Lincoln, Research Investment Fund – Research Studentship)

Life expectancy is increasing steadily and many developed countries, including the UK, are facing the big challenge of dealing with an ageing population at greater risk of impairments and cognitive disorders, which reduce quality of life. Early detection and monitoring of human activities of daily living (ADLs) is important in order to identify potential health problems and apply corrective strategies as soon as possible. In this context, the main aim of the current research is to monitor human activities in an ambient assisted living (AAL) environment, using a mobile robot for 3D perception, high-level reasoning and representation of such activities. The robot will enable constant but discreet monitoring of people in need of home care, complementing other fixed monitoring systems and proactively engaging in case of emergency. The goal of this research will be achieved by developing novel qualitative models of ADLs, including new techniques for 3D sensing of human motion and RFID-based object recognition. This research will be further extended by new solutions in long-term human monitoring for anomaly detection.

RFID-based Object Localization with a Mobile Robot to Assist the Elderly with Mild Cognitive Impairments

(University of Lincoln – Research Studentship)

Solutions for Active and Assisted Living (AAL) like smart sensors and assistive robots are being developed and tested to help the elderly at home. However, it is still very difficult for a robot to understand the context in which its services are supposed to be delivered, even for simple tasks like finding the keys left by a person somewhere in the house. These are practical problems affecting the lives of many elderly people, in particular those suffering from Mild Cognitive Impairments (MCI). Radio-frequency identification (RFID) technology is a viable option for locating objects in domestic environments. The main aim of this research is therefore to develop a system for a mobile robot to detect and map the position of RFID-tagged objects, exploiting its capability to explore the house and sense the environment from multiple locations. The system will be evaluated in the lab and in the real homes of elderly people.

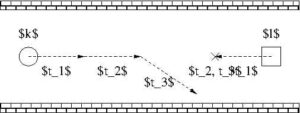

Qualitative Representations for Human Robot Spatial Interaction

(University of Lincoln – Staff Development Fund)

Personal robots should provide a range of dedicated services for entertainment, home assistance, etc. Several challenges need to be addressed in order for robots to move around humans swiftly, safely and politely. The Qualitative Trajectory Calculus (QTC) is a powerful method to abstract a large number of possible scenarios for Human-Robot Spatial Interaction (HRSI). In this research, computational models based on QTC are developed to analyse HRSIs and implement socially acceptable robot behaviours.
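As a rough illustration of the kind of abstraction QTC provides, the sketch below computes a simplified version of the basic QTC relation between two moving points (say, a human k and a robot l) from their 2D positions at two consecutive time steps. Each agent is labelled "-" if it moves towards the other's previous position, "+" if it moves away, and "0" if the distance is unchanged. The function names, the tolerance `eps` and the two-sample approximation are assumptions made for this example and are not taken from the project's own software.

```python
import math


def _sign_symbol(delta, eps=1e-6):
    """Map a change in distance to a qualitative symbol."""
    if delta < -eps:
        return "-"  # distance shrinks: moving towards
    if delta > eps:
        return "+"  # distance grows: moving away
    return "0"      # no significant change: stable


def qtc_basic(k_prev, k_next, l_prev, l_next, eps=1e-6):
    """Simplified basic QTC state for two points k and l.

    Positions are (x, y) tuples at two consecutive time steps.
    Returns (q1, q2): q1 describes the motion of k with respect to l,
    q2 the motion of l with respect to k.
    """
    d_ref = math.dist(k_prev, l_prev)
    q1 = _sign_symbol(math.dist(k_next, l_prev) - d_ref, eps)
    q2 = _sign_symbol(math.dist(l_next, k_prev) - d_ref, eps)
    return (q1, q2)


# Hypothetical usage: a human approaches a stationary robot.
state = qtc_basic((0.0, 0.0), (1.0, 0.0), (5.0, 0.0), (5.0, 0.0))
```

A whole encounter can then be described as a short sequence of such symbol pairs instead of a pair of metric trajectories, which is what makes many different scenarios comparable.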

Video: [IRSS 2012 Presentation]

HERMES: Human-Expressive Representations of Motion and their Evaluation in Sequences

(University of Oxford – EU FP6-IST, 027110)

HERMES concentrates on how to extract descriptions of human behaviour from videos in a circumscribable discourse domain, such as: (i) pedestrians crossing inner-city roads and pedestrians approaching or waiting at bus or tram stops, and (ii) humans in indoor worlds like an airport hall or a train station. These discourse domains allow exploring a coherent evaluation of human movements and facial expressions across a wide variation of scale. This general approach lends itself to various cognitive surveillance scenarios at varying degrees of resolution: from wide-field-of-view multiple-agent scenes, through to more specific inferences of emotional state that could be elicited from high-resolution imagery of faces. In this research, cognitive architectures and active perception algorithms, which link high-level reasoning to low-level sensing, are implemented on distributed and mobile camera systems. In particular, the cognitive active vision system in this research provides a natural language interpretation of the observed scene, and uses this information to close the action-perception loop at the semantic level.

Website: [http://cordis.europa.eu/project/rcn/81157_en.html]

Videos: [Cognitive Active Vision 1] [Cognitive Active Vision 2]

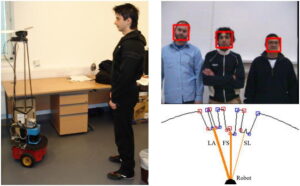

Multisensor People Tracking and Recognition with Mobile Robots

(University of Essex – Research Studentship)

In order to operate in human environments, mobile robots must be able to detect, track and recognize people. These capabilities are important for the robot to navigate safely and provide user-oriented services. Since humans and animals in general make use of a variety of sensory inputs to perceive the world, it seems quite natural to follow a similar approach with robots, and integrate the information provided by different sensors (e.g. camera and laser). This research aims to implement multisensor solutions to perform simultaneous human tracking and recognition, so that the former can improve the latter, and vice-versa. Probabilistic approaches and Bayesian estimators, such as Kalman or Particle filters, are applied in this research.
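To give a flavour of how a Bayesian estimator can combine two sensors, here is a minimal sketch of a scalar Kalman filter that tracks one coordinate of a person's position and fuses a camera and a laser reading by applying one update per sensor. The random-walk motion model, the noise variances and all function names are assumptions chosen for illustration; the actual research uses richer state and sensor models.

```python
def kalman_predict(x, p, q):
    """Random-walk prediction: the estimate stays, the variance grows by q."""
    return x, p + q


def kalman_update(x, p, z, r):
    """Correct estimate x (variance p) with measurement z (variance r)."""
    k = p / (p + r)  # Kalman gain: how much to trust the measurement
    return x + k * (z - x), (1.0 - k) * p


def fuse_step(x, p, measurements, q):
    """One filter cycle: predict, then update once per sensor reading.

    measurements is a list of (value, variance) pairs, e.g. one pair
    from the camera and one from the laser.
    """
    x, p = kalman_predict(x, p, q)
    for z, r in measurements:
        x, p = kalman_update(x, p, z, r)
    return x, p


# Hypothetical readings: camera (noisier) and laser (more precise).
camera = (2.0, 1.0)
laser = (1.0, 0.5)
x_est, p_est = fuse_step(0.0, 1.0, [camera, laser], q=0.0)
```

After both updates the variance of the estimate is lower than after either sensor alone, which is the basic reason for fusing them.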

Video: [Tracking and Recognition]